A PyQt widget for OpenCV camera preview

This is a hands-on post where I’ll show how to create a PyQt widget for previewing frames captured from a camera using OpenCV. Along the way, it’ll become clear how to use OpenCV images with PyQt. I won’t explain what PyQt and OpenCV are because, if you don’t know them yet, you probably don’t need them and this post is not for you =P.

The main reason for integrating PyQt and OpenCV is to provide a more sophisticated UI for applications that use OpenCV as the basis for computer vision tasks. In my specific case, I needed it for the facial recognition prototype used in the interview for Globo TV I posted last week. I usually don’t write about such specific things, but there’s little information about it on the net. So I’d like to contribute by sharing my findings.

The first problem to be solved is to show a cv.iplimage (the type of an OpenCV image in Python) object in a generic PyQt widget. This is easily solved by inheriting from QtGui.QImage this way:

```python
import cv
from PyQt4 import QtGui

class OpenCVQImage(QtGui.QImage):

    def __init__(self, opencvBgrImg):
        depth, nChannels = opencvBgrImg.depth, opencvBgrImg.nChannels
        if depth != cv.IPL_DEPTH_8U or nChannels != 3:
            raise ValueError("the input image must be 8-bit, 3-channel")
        w, h = cv.GetSize(opencvBgrImg)
        opencvRgbImg = cv.CreateImage((w, h), depth, nChannels)
        # it's assumed the image is in BGR format
        cv.CvtColor(opencvBgrImg, opencvRgbImg, cv.CV_BGR2RGB)
        self._imgData = opencvRgbImg.tostring()
        super(OpenCVQImage, self).__init__(self._imgData, w, h,
                                           QtGui.QImage.Format_RGB888)
```

The important lines here are the last three statements:

- The cv.CvtColor call converts the image from BGR to RGB format. OpenCV images loaded from files or queried from the camera are often (not always; adapt this to your scenario) delivered in BGR format, which is not what PyQt expects. Thus, I convert the image to RGB before going on. What happens if you skip this step? Well… nothing critical… the image will be shown with the R and B channels swapped.
- The assignment to self._imgData saves a reference to the opencvRgbImg byte content to prevent the garbage collector from deleting it when the constructor returns. This is very important, because a QImage built from a raw buffer uses that buffer directly instead of copying it.
- The final call to the base class constructor passes the byte content, dimensions and format of the image to QtGui.QImage.

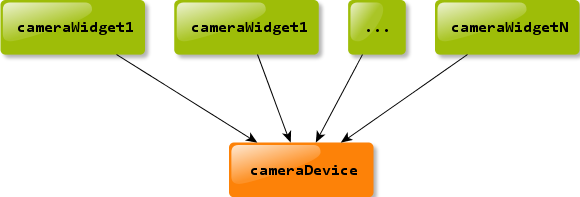
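To make the BGR-to-RGB step concrete without depending on OpenCV, here is a minimal pure-Python sketch (Python 3) of what the channel swap does to an interleaved 8-bit, 3-channel buffer. The function name `bgr_to_rgb` is hypothetical. The sketch assumes tightly packed rows with no padding. In the class above, `cv.CvtColor` does this work for you.

```python
def bgr_to_rgb(buf: bytes) -> bytes:
    """Swap the first and third byte of every 3-byte pixel (B,G,R -> R,G,B).

    Assumes an interleaved, 8-bit, 3-channel buffer without row padding.
    """
    if len(buf) % 3 != 0:
        raise ValueError("buffer length must be a multiple of 3")
    out = bytearray(buf)
    # Extended slices step over one channel at a time across all pixels.
    out[0::3], out[2::3] = buf[2::3], buf[0::3]
    return bytes(out)


# A 2-pixel "image": pure blue, then pure red, stored in BGR order.
bgr = bytes([255, 0, 0, 0, 0, 255])
print(list(bgr_to_rgb(bgr)))  # [0, 0, 255, 255, 0, 0]
```

Skipping this swap leaves every pixel's R and B values exchanged, which is exactly the "flipped channels" symptom described above.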

Done! If all you want is to show an OpenCV image in a PyQt widget, that’s all you need. However, for a camera preview it’s better to have a more convenient and complete API. Specifically, it must be straightforward to connect a camera device to a widget and still keep them decoupled. There should be one camera device, which can be seen as a frame provider, to many widgets:

And the frames must be manipulated independently by the widgets, i.e., the widgets must have complete control over the frames delivered to them.
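This decoupling is the classic observer (publish/subscribe) pattern, which Qt's signal/slot mechanism implements for us. As a Qt-free illustration, here is a minimal Python 3 sketch. `FrameSource` and its `connect`/`emit`/`disconnect` methods are hypothetical stand-ins for a `pyqtSignal`, not PyQt API.

```python
from typing import Callable, List


class FrameSource:
    """One provider, many consumers: a toy stand-in for a Qt signal."""

    def __init__(self) -> None:
        self._slots: List[Callable[[bytearray], None]] = []

    def connect(self, slot: Callable[[bytearray], None]) -> None:
        self._slots.append(slot)

    def disconnect(self, slot: Callable[[bytearray], None]) -> None:
        self._slots.remove(slot)

    def emit(self, frame: bytearray) -> None:
        # Every connected consumer receives the very same frame object.
        for slot in list(self._slots):
            slot(frame)


source = FrameSource()
received_a, received_b = [], []
source.connect(received_a.append)
source.connect(received_b.append)
source.emit(bytearray(b"frame-1"))
print(received_a[0] is received_b[0])  # True: both got the same object
```

The provider knows nothing about its consumers except that they are callables. However, both consumers end up holding the same object, which is why each camera widget needs its own copy of the frame.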

The camera device can be encapsulated like this:

```python
import cv
from PyQt4 import QtCore

class CameraDevice(QtCore.QObject):

    _DEFAULT_FPS = 30

    newFrame = QtCore.pyqtSignal(cv.iplimage)

    def __init__(self, cameraId=0, mirrored=False, parent=None):
        super(CameraDevice, self).__init__(parent)
        self.mirrored = mirrored
        self._cameraDevice = cv.CaptureFromCAM(cameraId)
        # query the camera once per frame period (in milliseconds)
        self._timer = QtCore.QTimer(self)
        self._timer.timeout.connect(self._queryFrame)
        self._timer.setInterval(1000 // self.fps)
        self.paused = False  # starts the timer

    @QtCore.pyqtSlot()
    def _queryFrame(self):
        frame = cv.QueryFrame(self._cameraDevice)
        if frame is None:
            # no frame available (e.g. the camera was disconnected)
            return
        if self.mirrored:
            mirroredFrame = cv.CreateImage(cv.GetSize(frame), frame.depth,
                                           frame.nChannels)
            cv.Flip(frame, mirroredFrame, 1)  # 1 = flip around the y-axis
            frame = mirroredFrame
        self.newFrame.emit(frame)

    @property
    def paused(self):
        return not self._timer.isActive()

    @paused.setter
    def paused(self, p):
        if p:
            self._timer.stop()
        else:
            self._timer.start()

    @property
    def frameSize(self):
        w = cv.GetCaptureProperty(self._cameraDevice,
                                  cv.CV_CAP_PROP_FRAME_WIDTH)
        h = cv.GetCaptureProperty(self._cameraDevice,
                                  cv.CV_CAP_PROP_FRAME_HEIGHT)
        return int(w), int(h)

    @property
    def fps(self):
        fps = int(cv.GetCaptureProperty(self._cameraDevice, cv.CV_CAP_PROP_FPS))
        if not fps > 0:
            # some drivers report 0 (unknown); fall back to a default
            fps = self._DEFAULT_FPS
        return fps
```

I won’t go through all this code because it’s not complex. Essentially, it uses a timer to query the camera for a new frame. The constructor sets the timer's interval from the fps. On each tick, _queryFrame emits the newFrame signal, passing the captured frame as a parameter. The timer is important to avoid wasting CPU time on unnecessary polling. The rest is just bureaucracy.
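The timer interval is just the frame period in milliseconds. There is a fallback for when the driver reports no usable fps, which is why the fps property returns `_DEFAULT_FPS` for non-positive values. Below is a minimal Python 3 sketch of that arithmetic; the function name `frame_interval_ms` is hypothetical. It uses integer division because `QTimer.setInterval` expects an integer number of milliseconds, and in Python 3 the `/` operator would produce a float.

```python
def frame_interval_ms(reported_fps: float, default_fps: int = 30) -> int:
    """Milliseconds between frame queries for a given frame rate.

    Mirrors the fallback in CameraDevice.fps: drivers that report 0 (or a
    negative value) get the default rate instead.
    """
    fps = int(reported_fps)
    if not fps > 0:
        fps = default_fps
    # Integer milliseconds, since QTimer.setInterval expects an int.
    return 1000 // fps


for reported in (30, 25, 15, 0, -1):
    print(reported, frame_interval_ms(reported))
```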

Now, let’s look at the camera widget itself. Its main purpose is to draw the frames delivered by the camera device. But before drawing a frame, it must allow anyone interested to process it, changing it if necessary, without interfering with any other camera widget. Here’s the code:

```python
import cv
from PyQt4 import QtCore
from PyQt4 import QtGui

# OpenCVQImage is the QImage subclass defined earlier in this post

class CameraWidget(QtGui.QWidget):

    newFrame = QtCore.pyqtSignal(cv.iplimage)

    def __init__(self, cameraDevice, parent=None):
        super(CameraWidget, self).__init__(parent)
        self._frame = None
        self._cameraDevice = cameraDevice
        self._cameraDevice.newFrame.connect(self._onNewFrame)
        w, h = self._cameraDevice.frameSize
        self.setMinimumSize(w, h)
        self.setMaximumSize(w, h)

    @QtCore.pyqtSlot(cv.iplimage)
    def _onNewFrame(self, frame):
        # keep a private copy so other widgets never see our changes
        self._frame = cv.CloneImage(frame)
        # let anyone interested process the copy before it is drawn
        self.newFrame.emit(self._frame)
        # schedule a paint event
        self.update()

    def changeEvent(self, e):
        if e.type() == QtCore.QEvent.EnabledChange:
            if self.isEnabled():
                self._cameraDevice.newFrame.connect(self._onNewFrame)
            else:
                self._cameraDevice.newFrame.disconnect(self._onNewFrame)
        super(CameraWidget, self).changeEvent(e)

    def paintEvent(self, e):
        if self._frame is None:
            return
        painter = QtGui.QPainter(self)
        painter.drawImage(QtCore.QPoint(0, 0), OpenCVQImage(self._frame))
```

Again… I won’t go through all of it. The really important parts are _onNewFrame and paintEvent. As stated before, it’s paramount that widgets sharing a camera device don’t interfere with each other. Here, all widgets receive the same frame, which is in fact a reference to the same memory location. This means that if a widget modifies a frame, the others will see the change. Clearly, this is not desirable. So every widget saves its own copy of the frame (the cv.CloneImage call in _onNewFrame). This way, each widget can do whatever it wants safely. However, processing the frame is not the widget’s responsibility. Thus, it emits a signal with the saved copy as a parameter, and anyone connected to it can do the hard work.
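The aliasing problem, and why cloning fixes it, can be shown without OpenCV. In this Python 3 sketch, a `bytearray` stands in for a `cv.iplimage`, and `bytearray(frame)` plays the role of `cv.CloneImage`. `ToyWidget` and `draw_marker` are hypothetical names used only for illustration.

```python
class ToyWidget:
    """Keeps the frames it receives, optionally as private copies."""

    def __init__(self, clone: bool = True) -> None:
        self.clone = clone
        self.frame = None

    def on_new_frame(self, frame: bytearray) -> None:
        # With clone=True this mirrors cv.CloneImage(frame) in _onNewFrame.
        self.frame = bytearray(frame) if self.clone else frame


def draw_marker(frame: bytearray) -> None:
    """Stand-in for per-widget processing: overwrite the first pixel."""
    frame[0:3] = b"\xff\x00\x00"


shared = bytearray(6)                 # one 2-pixel frame from the "camera"
w1, w2 = ToyWidget(), ToyWidget()
for w in (w1, w2):
    w.on_new_frame(shared)
draw_marker(w1.frame)                 # only widget 1 processes its frame
print(w1.frame[:3], w2.frame[:3])     # w2 (and the camera frame) unchanged

a1, a2 = ToyWidget(clone=False), ToyWidget(clone=False)
for w in (a1, a2):
    w.on_new_frame(shared)
draw_marker(a1.frame)                 # without cloning...
print(a2.frame[:3])                   # ...widget 2 sees widget 1's change
```

The cost of this design is one frame copy per widget per tick, which is usually negligible compared to capturing and drawing.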

It remains to draw the frame, which is done by overriding the paintEvent method of the QtGui.QWidget class. The self.update() call in _onNewFrame schedules a paint event. When that event occurs, the painter.drawImage call in paintEvent actually draws the frame (using OpenCVQImage, as you can see).

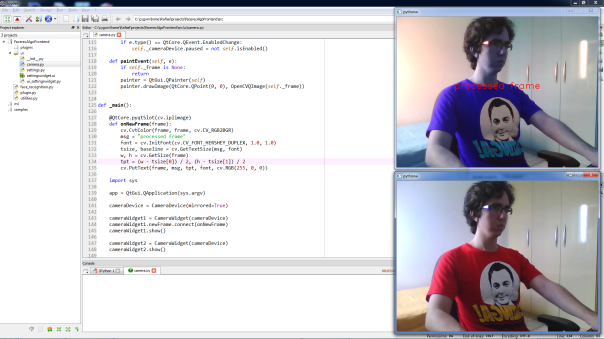

The following snippet shows how to use all this together:

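```python
import sys

import cv
from PyQt4 import QtCore
from PyQt4 import QtGui

# CameraDevice, CameraWidget and OpenCVQImage are the classes defined above


def _main():

    @QtCore.pyqtSlot(cv.iplimage)
    def onNewFrame(frame):
        # swap the R and B channels in place, so this widget's colors differ
        cv.CvtColor(frame, frame, cv.CV_RGB2BGR)
        msg = "processed frame"
        font = cv.InitFont(cv.CV_FONT_HERSHEY_DUPLEX, 1.0, 1.0)
        tsize, baseline = cv.GetTextSize(msg, font)
        w, h = cv.GetSize(frame)
        # PutText's origin is the bottom-left corner of the text, hence "+"
        tpt = (w - tsize[0]) // 2, (h + tsize[1]) // 2
        cv.PutText(frame, msg, tpt, font, cv.RGB(255, 0, 0))

    app = QtGui.QApplication(sys.argv)
    cameraDevice = CameraDevice(mirrored=True)
    cameraWidget1 = CameraWidget(cameraDevice)
    cameraWidget1.newFrame.connect(onNewFrame)  # only widget 1 processes
    cameraWidget1.show()
    cameraWidget2 = CameraWidget(cameraDevice)
    cameraWidget2.show()
    sys.exit(app.exec_())


if __name__ == "__main__":
    _main()
```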

Note that the two CameraWidget objects share the same CameraDevice, but only the first one processes the frames (through the onNewFrame slot connected to cameraWidget1.newFrame). The result is two widgets showing different images derived from the same frame, as expected.

Now you can embed CameraWidget in a PyQt application and have a fresh OpenCV camera preview. Cool, huh? I hope you enjoyed it =P.

Until next…

A very lightweight plug-in infrastructure in Python

For some applications, run-time extensibility is a major requirement. There are lots of examples out there: browsers, media players, photo editors, etc. All these programs can be easily extended with new functionality using plug-ins. How is this done?

It seems like complex stuff. Indeed, it really is, especially when you are using a bureaucratic language like Java or digging into the low level with C. However, this can be a piece of cake =P under three conditions: there are no security concerns, the extensions are of limited scope, and you are using a language with great introspection power like Python.

Let’s see… suppose the plug-ins provide their services by means of the following contract interface:

```python
class Plugin(object):

    def setup(self):
        raise NotImplementedError

    def teardown(self):
        raise NotImplementedError

    def run(self, *args, **kwargs):
        raise NotImplementedError
```

Given this, a basic plug-in infrastructure should have as features:

- A way to auto-discover subclasses of Plugin on demand at run time
- A centralized way to access these subclasses

Thanks to the black magic of Python metaclasses (I’m assuming you are familiar with them; otherwise, see this excellent SO discussion), it’s very simple to implement those features:

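```python
class Plugin(object):

    # Python 2 syntax: Python 3 ignores the __metaclass__ attribute
    class __metaclass__(type):

        def __init__(cls, name, bases, attrs):
            if not hasattr(cls, 'registered'):
                # this is the Plugin base class itself: create the registry
                cls.registered = []
            else:
                # this is a subclass: register it
                cls.registered.append(cls)

    ...
```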
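Under Python 3, the same registration trick can be written with the `metaclass` keyword argument, as in the sketch below. `PluginMeta` is a hypothetical name chosen for illustration. Python 3.6+ also offers `__init_subclass__`, which achieves the same effect without a metaclass.

```python
class PluginMeta(type):
    """Collects every subclass of the class that first uses this metaclass."""

    def __init__(cls, name, bases, attrs):
        super().__init__(name, bases, attrs)
        if not hasattr(cls, "registered"):
            cls.registered = []          # the Plugin base class itself
        else:
            cls.registered.append(cls)   # any subclass, at definition time


class Plugin(metaclass=PluginMeta):
    def setup(self):
        raise NotImplementedError

    def teardown(self):
        raise NotImplementedError

    def run(self, *args, **kwargs):
        raise NotImplementedError


class SamplePlugin1(Plugin):
    pass


class SamplePlugin2(Plugin):
    pass


print([cls.__name__ for cls in Plugin.registered])
# ['SamplePlugin1', 'SamplePlugin2']
```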

Now, every time a subclass of Plugin is defined, it is added to Plugin.registered, so there’s a centralized way to access the plug-ins. But the problem of auto-discovery remains. For the metaclass trick to work, a plug-in class must actually be defined, which requires importing the modules that contain the plug-in class definitions. However, this is easy to fix:

```python
import imp
import logging
import pkgutil

class Plugin(object):

    class __metaclass__(type):
        ...

    @classmethod
    def load(cls, *paths):
        paths = list(paths)
        cls.registered = []
        for _, name, _ in pkgutil.iter_modules(paths):
            fid, pathname, desc = imp.find_module(name, paths)
            try:
                # importing the module defines its Plugin subclasses,
                # which registers them through the metaclass
                imp.load_module(name, fid, pathname, desc)
            except Exception as e:
                logging.warning("could not load plugin module '%s': %s",
                                pathname, e)
            finally:
                if fid:
                    fid.close()

    ...
```

The class method load forces the import of any module found in a path list. Consequently, no explicit import is needed to discover the plug-ins, which keeps the application itself fully decoupled from them.

As a usage example, if you had defined subclasses SamplePlugin1 and SamplePlugin2 of Plugin in some module located at "./plugins/", you could access them this way:

```python
>>> Plugin.load("plugins/")
>>> Plugin.registered
[<class 'SamplePlugin1'>, <class 'SamplePlugin2'>]
```
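The `imp` module used by load is deprecated in Python 3 and was removed in Python 3.12. Below is a sketch of a possible Python 3 replacement for the discovery step, built on `pkgutil` and `importlib.util`. The name `load_plugin_modules` is hypothetical. Registration still happens as a side effect of executing each module, exactly as with the metaclass above.

```python
import importlib.util
import logging
import pkgutil
import sys


def load_plugin_modules(*paths):
    """Import every top-level module found in the given directories.

    Returns the successfully loaded modules; failures are logged and skipped.
    """
    loaded = []
    for finder, name, _ispkg in pkgutil.iter_modules(list(paths)):
        spec = finder.find_spec(name)
        if spec is None or spec.loader is None:
            continue
        module = importlib.util.module_from_spec(spec)
        sys.modules[name] = module  # like imp.load_module, register by name
        try:
            spec.loader.exec_module(module)
        except Exception as e:
            del sys.modules[name]
            logging.warning("could not load plugin module '%s': %s",
                            spec.origin, e)
        else:
            loaded.append(module)
    return loaded
```

With this helper, Plugin.load would reset the registry and then call `load_plugin_modules(*paths)`.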

Of course, this is extremely simple. There’s no sandbox (which implies security issues), and the plug-ins are passive: the application calls their methods, rather than the plug-ins calling methods of a plug-in API. However, for many programs this is enough, and anything more complex would be over-engineering.

That’s it. This is a common problem in software engineering, so I hope this is useful. =)

Interview on Globo TV about facial recognition

Hey there,

with about a month of delay, here is an interview with me on Globo TV (the biggest TV station in Brazil) about facial recognition. The interview is in Portuguese… so, sorry to the international audience =P. At some point in the future I’ll make the prototype used in the interview available for download.

I’m famous now!! =D=D

Original Source: http://video.globo.com/Videos/Player/Noticias/0,,GIM1562718-7823-BIOMETRIA+FACIAL++PARTE+2,00.html

Comparing objects by memory location in Haskell

Recently I needed to compare two objects for memory equivalence in Haskell. That is, I was looking for a function like:

```haskell
isMemoryEquivalent :: a -> a -> Bool
```

The first thing you might ask is: “why the hell are you doing this in Haskell?”. And your surprise is understandable. However, once in a while a project requires you to go low-level and get dirty =P.

I was writing a Scheme interpreter (which will be the subject of a post soon), and this need came up while implementing the equal? built-in procedure. This procedure checks whether two Scheme objects can be regarded as the same, i.e., whether they are equivalent. It’s easy to see that this is extremely hard for procedures (in fact, it’s impossible in the general case).

For example, try to think about an efficient algorithm to compare the following procedures:

```scheme
(define (succ1 x) (+ x 1))
(define (succ2 x) (- (+ x 2) 1))
```

One possibility is to check whether the domains of succ1 and succ2 are the same. If so, you could evaluate succ1 and succ2 over the domain and compare the results. But this is a naïve approach that is seldom possible in practice. It assumes the domains can be known, which is impossible in dynamically typed languages such as Scheme. And even if the domains could be known, they would have to be finite (or practically finite), which is almost never true. Think about floating-point numbers, arbitrary-precision integers, tree-like data structures, etc. Not to mention the efficiency problems that arise when the procedures are costly, e.g., a factorial procedure. Well, this is not exactly new if you know a bit of the theory of computation, but thinking about it concretely makes it clear.

The alternative is to relax the definition of the equal? procedure: two procedures are said to be equal if they are stored in the same memory location (their memory pointers are equal). That is, (equal? (lambda (x) x) (lambda (x) x)) must return #f.
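Python happens to draw the same distinction between behavioral and memory equivalence: `is` compares object identity rather than contents or behavior. Below is a small illustration of the relaxed rule for procedures. `procedures_equal` is a hypothetical helper, not part of any interpreter mentioned here.

```python
def procedures_equal(p, q) -> bool:
    """Relaxed equal? for procedures: true only for the very same object."""
    return p is q


def succ1(x):
    return x + 1


def succ2(x):
    return (x + 2) - 1


alias = succ1

print(procedures_equal(succ1, succ2))              # False, despite same behavior
print(procedures_equal(succ1, alias))              # True: same object
print(procedures_equal(lambda x: x, lambda x: x))  # False, like the Scheme example
```

The check is cheap and always terminates, at the price of calling behaviorally identical procedures different.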

But there are no native pointers in Haskell. To implement this behavior in the interpreter, I need to use the extension infrastructure of the Haskell environment. In my case, the Haskell environment is the excellent GHC compiler and the suitable infrastructure is the Foreign module. Using it, I can implement isMemoryEquivalent as follows:

```haskell
import Foreign

isMemoryEquivalent :: a -> a -> IO Bool
isMemoryEquivalent obj1 obj2 = do
  obj1Ptr <- newStablePtr obj1
  obj2Ptr <- newStablePtr obj2
  let result = obj1Ptr == obj2Ptr
  freeStablePtr obj1Ptr
  freeStablePtr obj2Ptr
  return result
```

This code snippet is quite easy to understand. It acquires pointers to the objects, compares them, and releases them. This acquire/release process is needed because the garbage collector is free to move objects around in memory, which would invalidate raw pointers. So first, a stable reference to the object of interest must be acquired, one that the garbage collector will neither invalidate nor deallocate, and it must be released at the end. The Foreign module functions newStablePtr and freeStablePtr do exactly this. Note also the use of the IO monad, since pointer operations can be viewed as a kind of IO.

This is quite a specific topic, but it’s instructive about how you can lower the level with Haskell. One interesting thing is that a low level in Haskell is indeed very high =P. Anyway… kids, don’t try this without adult supervision, ok? =P

What do we mean by data after all?

I’m reading Structure and Interpretation of Computer Programs (the famous SICP)… what a fantastic book! I haven’t finished it yet, but so far I can safely say it’s the best book about Computer Science I’ve come across since I started studying the field.

This book is about abstraction… about how to control the complexity of expressing ideas. And this is, in fact, the real deal of Computer Science: how to formalize ideas of process (the “How To” knowledge) in a manageable way. All these “modern” concepts, such as first-class functions, closures, dynamic typing and message passing (a.k.a. object orientation), are nothing more than instances of a more fundamental one: abstraction.

It’s interesting to see that these “modern” things are not new at all; many academics already knew them well in the 1970s. It’s true that many academics sometimes lose sight of reality. But it’s also true that most CS professionals are fixated on technology, when what really matters for their work is the thinking. If this weren’t the case, maybe the old things wouldn’t seem new today =P. This book opens the mind to this, and I wish I had gotten my hands on it a long time ago. Perhaps I just wasn’t prepared =).

SICP uses a dialect of Lisp called Scheme to introduce its subject. I’ve always heard wonders about this language from very smart people (e.g. Peter Norvig, Paul Graham, Richard Stallman), and I’ve tried to learn it a few times. But I couldn’t see why all those smart people revered Scheme. It seemed to me just like another functional language, with an exotic notation and a lack of practical purpose. This bothered me, because I really think that if a lot of smart people like something that I don’t, there’s something wrong with me =P. The problem was that an extraordinary idea needs an extraordinary presentation to be fully understood and to deliver that “aha! moment”. I wasn’t exposed to such a presentation until SICP.

One of the greatest insights SICP gives is that everything in the ideal world of software is process (or procedure, which is the expression of a process), even data. This vision of data is so confusing and surprising that I decided to write a post about it. Let’s see what it means using an example from SICP.

Suppose we’d like to develop a rational number library. First, we need to understand what a rational number is: two integers representing the numerator and the denominator. So, in order to operate on rational numbers we’ll need a constructor and selectors for the numerator and the denominator. In Scheme it could be something like:

```scheme
(define (make-rat n d) ...)
(define (numer x) ...)
(define (denom x) ...)
```

From these basics we can implement procedures for a rational number arithmetic:

```scheme
(define (add-rat x y)
  (make-rat (+ (* (numer x) (denom y))
               (* (numer y) (denom x)))
            (* (denom x) (denom y))))

(define (mul-rat x y)
  (make-rat (* (numer x) (numer y))
            (* (denom x) (denom y))))

...
```

The arithmetic procedures see a rational number only through the constructor and the selectors, which are themselves procedures. Thus, so far, although we think of rational numbers as data, we are working with procedures. But you might say: “you are tricking me, after all the selectors work over CONCRETE data”. It seems reasonable… let’s implement make-rat, numer and denom and investigate this. One possibility is to represent a rational number as a pair, using the Scheme primitive procedures cons, car and cdr.

```scheme
(define (make-rat n d) (cons n d))
(define (numer x) (car x))
(define (denom x) (cdr x))
```

The cons procedure receives two things (it doesn’t matter what they really are) and returns a pair. car/cdr extract the first/second element of a pair. Seeing this you say: “Aha! I was right, there’s a CONCRETE data called pair. Thus, there’s a difference between procedures and data. Constructors and selectors are just a simple abstraction on top of the real concrete data.” But this is not true, because cons, car and cdr are just procedures like make-rat, numer and denom. Nothing says pairs are CONCRETE data. Consider the following implementation of those procedures:

```scheme
(define (cons n d)
  (define (dispatch m)
    (cond ((= m 0) n)
          ((= m 1) d)))
  dispatch)

(define (car z)
  (z 0))

(define (cdr z)
  (z 1))
```

Pay attention to them until you understand. Can you see that the “CONCRETE data called pair” is just another procedure? Just a process? It does not exist concretely; it’s an abstraction of the idea of a pair. You may argue that numbers are concrete data… “1, 2, 3… this kind of basic stuff is primitive, there’s no way it can be a procedure”, you might say. Indeed, it can. For the natural numbers, such procedures are called Church numerals, because they were defined by Alonzo Church in his work on the λ-calculus.
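Both ideas carry over to any language with closures. Below is a Python 3 transliteration of the dispatch-based cons/car/cdr, followed by Church numerals. The number n is represented as the procedure that applies f to x n times. `succ`, `add` and `to_int` are names I chose for illustration; `to_int` only decodes a numeral into a Python int so we can inspect it.

```python
def cons(a, b):
    """A pair that is nothing but a procedure, as in the Scheme version."""
    def dispatch(m):
        if m == 0:
            return a
        if m == 1:
            return b
        raise ValueError("argument not 0 or 1: %r" % (m,))
    return dispatch


def car(z):
    return z(0)


def cdr(z):
    return z(1)


# Church numerals: n is "apply f to x, n times".
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m, n: lambda f: lambda x: m(f)(n(f)(x))


def to_int(n):
    """Decode a Church numeral by counting applications of f."""
    return n(lambda k: k + 1)(0)


one = succ(zero)
two = succ(one)
print(car(cons(1, 2)), cdr(cons(1, 2)))  # 1 2
print(to_int(add(two, succ(two))))       # 5
```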

This is a little mind-boggling at first, since we are used to thinking of procedures and data as separate, complementary things, but they aren’t. If you see a program as a representation of thought and recognize that data is just a thought, this idea becomes clear. It may look like a mere curiosity, but this notion is extremely important: in the end, it’s all abstraction, and Computer Science is all about abstraction.

Of course, if data is procedure, can any procedure be seen as data? No… what distinguishes processes that are data from those that aren’t are the restrictions (or axioms) over the processes. For instance, if make-rat, numer and denom define a rational number, it must be true that:

```scheme
(= (/ (numer (make-rat n d))
      (denom (make-rat n d)))
   (/ n d))
```

In the same way, for cons, car and cdr we must have:

```scheme
(eq? (car (cons a b)) a)
(eq? (cdr (cons a b)) b)
```

To summarize we could define data as: procedures + axioms over them.
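This definition can be checked mechanically. The Python 3 sketch below builds the rational-number procedures on top of a procedural pair. It then tests the make-rat/numer/denom axiom, using `fractions.Fraction` for exact division. The sample values are arbitrary, and the loop is a spot check, not a proof.

```python
from fractions import Fraction


def cons(a, b):
    # a pair represented purely as a procedure
    return lambda m: a if m == 0 else b


def car(z):
    return z(0)


def cdr(z):
    return z(1)


def make_rat(n, d):
    return cons(n, d)


def numer(x):
    return car(x)


def denom(x):
    return cdr(x)


def add_rat(x, y):
    return make_rat(numer(x) * denom(y) + numer(y) * denom(x),
                    denom(x) * denom(y))


def mul_rat(x, y):
    return make_rat(numer(x) * numer(y), denom(x) * denom(y))


def value(x):
    """Exact value of a rational, for comparison only."""
    return Fraction(numer(x), denom(x))


# The axiom: numer(make_rat(n, d)) / denom(make_rat(n, d)) == n / d
for n, d in [(1, 2), (3, 4), (-5, 6), (10, 4)]:
    r = make_rat(n, d)
    assert Fraction(numer(r), denom(r)) == Fraction(n, d)

half, third = make_rat(1, 2), make_rat(1, 3)
print(value(add_rat(half, third)))  # 5/6
print(value(mul_rat(half, third)))  # 1/6
```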

PS: An important point here is the use of Scheme to demonstrate these concepts. Why not use C or Python or any other mainstream language? After all, the behavior of the code presented could be emulated in languages other than Scheme. The point is that Scheme was designed to make these concepts transparent. When you write a Scheme program, you think this way. In fact, this way of thinking is based on a solid, elegant theory called λ-calculus (the basis of Church numerals), and Scheme was conceived to model it. This is why Scheme seems so elegant to me. Everything just fits. It’s the most orthogonal language I know, and this does not make the programmer unproductive; quite the contrary.

OpenCV 2.2 + Webcam + Windows not working

Hey there…

it has been a while since my last post… but I’m here again, although I won’t stay long =P. This will be a short post… another quick and dirty tip. I’ve been very busy and haven’t had the time to post anything really substantial. However, I think this can be helpful, so…

Recently I had a problem using my webcam with OpenCV 2.2 on Windows. Most of the time I use Linux and have never had a problem like this. But last week I needed OpenCV 2.2 + Windows for a Computer Vision project and found out that my webcam simply does not work in this configuration. When I try to create a capture object, a window opens asking me to select the device. However, it does not succeed, even if I select the device correctly.

Oddly, this does not occur with OpenCV 2.1 + Windows. Thus, I concluded it’s a bug in OpenCV 2.2, specifically in the highgui package. Apparently, this version of OpenCV uses a camera back-end other than DirectShow. That's odd, because OpenCV supports DirectShow, and as far as I know it is the recommended way of accessing cameras on Windows.

A solution is to force OpenCV to use DirectShow, which can be done by defining the macros HAVE_VIDEOINPUT and HAVE_DSHOW during build configuration. Just edit the compiler flags variable (CMAKE_CXX_FLAGS, since highgui is written in C++), adding the proper options. For example, if you’re building OpenCV using Visual Studio, append “/DHAVE_DSHOW /DHAVE_VIDEOINPUT” to that variable.

Well, this worked for me… I hope it can be helpful to you =).

PS: As a matter of fact, this is a bug in the setup of the OpenCV building, not a bug in OpenCV itself.

Accessing the frontal camera in Galaxy Tab (Android 2.2)

This is just a quick tip for those of you trying to access the frontal camera in Android 2.2. You’ve probably found out that it’s not as easy as it should be. Android 2.2 does not have any official support for frontal cameras. In fact, Android 2.2 does not have ANY official support for more than ONE camera. So, OFFICIALLY, it’s not possible to use the frontal camera of your device if it runs Android 2.2. What a shame… seriously… this is a quite basic feature… it shouldn’t be that difficult. Fortunately, Google fixed this in Android 2.3. But, thanks to Android fragmentation, I cannot just upgrade my system and, consequently, I’m stuck with Android 2.2. Again… what a shame… =S

After almost one week of googling, my colleague Hugo discovered a workaround for the particular case of the Galaxy Tab (the device we’re using). I’m not sure whether it works on other Android 2.2 devices… if you try it, let me know the result. So here is what you have to do:

```java
Camera camera = Camera.open();
Camera.Parameters cameraParameters = camera.getParameters();
cameraParameters.set("camera-id", 2);
camera.setParameters(cameraParameters);
```

The magic is done in the third line with the parameter "camera-id". I think this is self-explanatory, thus I’m finishing here. Until next post and good luck with it =).

UPDATE: it seems this won’t work with camera.takePicture

UPDATE: of course, you need to put the following in AndroidManifest.xml:

```xml
<uses-permission android:name="android.permission.CAMERA" />
<uses-feature android:name="android.hardware.camera" />
```

Controlling FPU rounding modes with Python

Hey folks! In the previous post I talked a little about the theory behind the floating-point arithmetic and rounding methods. This time I’ll try a more practical approach showing how to control the rounding modes that FPUs (Floating-Point Units) employ to perform their operations. For that I’ll use Python. But, first let me introduce the <fenv.h> header of the ANSI C99 standard.

The ANSI C99 standard establishes the <fenv.h> header to control the floating-point environment of the machine. This header defines many functions and constants for configuring the properties of the floating-point system, including rounding modes. In our case, the important definitions are:

Functions

- int fegetround() – Returns the current rounding mode
- int fesetround(int mode) – Sets the rounding mode, returning 0 if all went OK and a nonzero value otherwise

Constants

- FE_TOWARDZERO – Flag for round toward zero
- FE_DOWNWARD – Flag for round toward minus infinity
- FE_UPWARD – Flag for round toward plus infinity
- FE_TONEAREST – Flag for round to nearest

Unfortunately, not all compilers fully implement the ANSI C99 standard. Therefore, there is no guarantee that code using the <fenv.h> header is portable. With this caveat in mind, the GCC compiler does support it, so it's possible to build a Python extension wrapping the features of <fenv.h>. However, this is not a smart way to reach our goal, since it would force users to compile the extension's source code. An alternative is to use the ctypes Python module to load libm (part of the standard C library used by GCC, typically glibc, which implements <fenv.h>):

```python
from ctypes import cdll
from ctypes.util import find_library

libm = cdll.LoadLibrary(find_library('m'))
```

The constants that identify the rounding modes are usually defined as macros in <fenv.h> and, therefore, are not accessible via ctypes. We need to redefine them in Python. The problem is that their values vary by processor, so some logic based on the result of platform.processor() is necessary. Right now, however, I only have an x86 processor to test on, so I will use the constants for it only:

```python
FE_TOWARDZERO = 0xc00
FE_DOWNWARD = 0x400
FE_UPWARD = 0x800
FE_TONEAREST = 0
```

Now, just call the appropriate functions:

```python
>>> back_rounding_mode = libm.fegetround()
>>> libm.fesetround(FE_DOWNWARD)
0
>>> 1.0/10.0
0.099999999999999992
>>> libm.fesetround(FE_UPWARD)
0
>>> 1.0/10.0
0.10000000000000001
>>> libm.fesetround(back_rounding_mode)
```

But, what about the MS Visual Studio? Well… the standard C library of this compiler, msvcrt (MS Visual Studio C Run-Time Library), does not support <fenv.h>. Nonetheless, MS Visual Studio implements a set of non-standard (quite typical…) constants and functions specialized in manipulating the properties of the machine FPU. These constants and functions are defined in the <float.h> header distributed with that compiler. The following definitions are of particular interest to us:

Functions

- unsigned int _controlfp(unsigned int new, unsigned int mask) – Sets/gets the control vector of the floating-point system (more information here)

Constants

- _MCW_RC = 0x300 – Control vector mask for information about rounding modes
- _RC_CHOP = 0x300 – Control vector value for round toward zero mode
- _RC_UP = 0x200 – Control vector value for round toward plus infinity mode
- _RC_DOWN = 0x100 – Control vector value for round toward minus infinity mode
- _RC_NEAR = 0 – Control vector value for round to nearest mode

Analogous to the previous case:

```python
>>> _MCW_RC = 0x300
>>> _RC_UP = 0x200
>>> _RC_DOWN = 0x100
>>> from ctypes import cdll
>>> msvcrt = cdll.msvcrt
>>> back_rounding_mode = msvcrt._controlfp(0, 0)
>>> msvcrt._controlfp(_RC_DOWN, _MCW_RC)
590111
>>> 1.0/10.0
0.099999999999999992
>>> msvcrt._controlfp(_RC_UP, _MCW_RC)
590367
>>> 1.0/10.0
0.10000000000000001
>>> msvcrt._controlfp(back_rounding_mode, _MCW_RC)
589855
```

Of course, it’s not convenient to type all this every time you want to change the rounding mode. Nor is it appropriate to guess the standard C library of the system. But you can always create functions that encapsulate this behavior. Something like this:

```python
def _start_libm():
    global TO_ZERO, TOWARD_MINUS_INF, TOWARD_PLUS_INF, TO_NEAREST
    global set_rounding, get_rounding
    from ctypes import cdll
    from ctypes.util import find_library
    libm = cdll.LoadLibrary(find_library('m'))
    set_rounding, get_rounding = libm.fesetround, libm.fegetround
    # x86
    TO_ZERO = 0xc00
    TOWARD_MINUS_INF = 0x400
    TOWARD_PLUS_INF = 0x800
    TO_NEAREST = 0

def _start_msvcrt():
    global TO_ZERO, TOWARD_MINUS_INF, TOWARD_PLUS_INF, TO_NEAREST
    global set_rounding, get_rounding
    from ctypes import cdll
    msvcrt = cdll.msvcrt
    # 0x300 is _MCW_RC: only the rounding-mode bits are changed/reported
    set_rounding = lambda mode: msvcrt._controlfp(mode, 0x300)
    get_rounding = lambda: msvcrt._controlfp(0, 0) & 0x300
    TO_ZERO = 0x300
    TOWARD_MINUS_INF = 0x100
    TOWARD_PLUS_INF = 0x200
    TO_NEAREST = 0

# try each back-end in turn; the else clause runs only if none succeeded
for _start_rounding in _start_libm, _start_msvcrt:
    try:
        _start_rounding()
        break
    except Exception:
        pass
else:
    print("ERROR: You couldn't start the FPU module")
```

In this case, the constants and functions to be called, as well as the standard C library instantiated, are abstracted. Just use the constants TO_ZERO, TOWARD_MINUS_INF, TOWARD_PLUS_INF, TO_NEAREST and the functions set_rounding, get_rounding.

So, that’s it… I hope this has been useful to you somehow (I doubt it… :P) or, at least, that it was interesting… Until next time…